Recently, I have become increasingly fascinated by the seemingly endless possibilities of monitoring things. I think it all started with learning Zabbix 3.x and using it instead of the old-school Nagios. But those two are fairly standard monitoring tools, among many others of their type.

Lately, full-text search tools such as ElasticSearch have been all the rage in the industry. And for a good reason: they are simply awesome for viewing, searching and analysing all sorts of data. They will be even more so once all the new features announced at Elastic{ON} are implemented and live.

So, you want to check out the goodies? Let me summon some views using buzzword magic: Let's run a scalable ElasticStack Cluster using Docker and Docker-Compose in three simple steps!

Channelling my inner buzzwords-lover

I'm a Docker sceptic. I am a little afraid of running Docker in production and even more afraid of upgrading it once it goes live, but I love it for running quick and (sometimes) dirty tests and development environments. Having recently finished a Docker for Developers and System Administrators training, I felt compelled to use my newly acquired knowledge of docker-compose to explore what Elasticsearch can do.

You can find the code on my GitHub.

ElasticStack itself consists of so many different products now that it's getting hard to describe what it is. At its core, it's a full-text search engine with the whole ecosystem of tools built around it. I recommend reading the linked page about the products for a general introduction. Later in the series, I will show some of the more fun things to do with it. For now, I'll show you a way of getting it up and running in no time and without (too much) pain.

Disclaimer: There is only one thing that annoys me more than Docker itself: talks about Docker which keep explaining how it differs from jails and Linux Containers, why layers are cool, how Copy-On-Write works, etc. I will just assume that you either know the basics or don't care about them and simply want to get on with running the ElasticStack.

Three simple steps!

Don't do this on production, please!

$ git clone https://github.com/wurbanski/elasticstack-demo.git
$ cd elasticstack-demo
$ docker-compose up -d

And there goes your cluster! Right? You can run docker-compose ps and see something like this:

$ docker-compose ps
               Name                             Command               State            Ports
------------------------------------------------------------------------------------------------------
elastickstackdemo_elasticsearch1_1   /bin/bash bin/es-docker          Exit 78
elastickstackdemo_elasticsearch2_1   /bin/bash bin/es-docker          Exit 78
elastickstackdemo_elasticsearch3_1   /bin/bash bin/es-docker          Exit 78
elastickstackdemo_kibana_1           /bin/sh -c /usr/local/bin/ ...   Up        0.0.0.0:5601->5601/tcp

If that's not what you see, you can skip the following part. Else...

'What the heck? Why are my elasticsearches down?' you might be asking. 'That wurbanski guy ripped me off!' Fear not, for I am a good man and I will lead you out of your misery :). Let's see what's going on:

$ docker logs elastickstackdemo_elasticsearch1_1
### yadda yadda, lotsa java
ERROR: bootstrap checks failed
max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]
### something

Elasticsearch containers have exited with an error due to limits on your host machine (unless you have already raised them earlier).

The fix is easy: just run sudo sysctl -w vm.max_map_count=262144 on your machine, as per the docs. Note that this change is not permanent and will be lost on the next reboot. Once the limit is raised, run docker-compose up -d again to restart the exited containers.
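If you want the setting to stick, the Elasticsearch documentation suggests putting it in /etc/sysctl.conf. Below is a small sketch that checks the current value first and prints the commands to run. It assumes a Linux host with the sysctl tool available, and the helper name needs_raise is made up for this example.

```shell
#!/bin/sh
# Minimum demanded by the bootstrap check in the log above.
REQUIRED=262144

# needs_raise VALUE: succeeds when VALUE is below the required minimum.
# (Hypothetical helper name, used only for this sketch.)
needs_raise() {
    [ "$1" -lt "$REQUIRED" ]
}

# Read the current value; fall back to 0 if sysctl is unavailable.
current=$(sysctl -n vm.max_map_count 2>/dev/null || echo 0)
current=${current:-0}

if needs_raise "$current"; then
    echo "vm.max_map_count is $current, needs at least $REQUIRED"
    echo "To apply now: sudo sysctl -w vm.max_map_count=$REQUIRED"
    echo "To keep it:   echo 'vm.max_map_count=$REQUIRED' | sudo tee -a /etc/sysctl.conf"
else
    echo "vm.max_map_count is $current, nothing to do"
fi
```

The script only reports what to do; it deliberately does not call sudo itself.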

Now everything should be working just fine:

$ docker-compose ps
               Name                             Command               State                Ports
--------------------------------------------------------------------------------------------------------------
elastickstackdemo_elasticsearch1_1   /bin/bash bin/es-docker          Up      0.0.0.0:9200->9200/tcp, 9300/tcp
elastickstackdemo_elasticsearch2_1   /bin/bash bin/es-docker          Up      9200/tcp, 9300/tcp
elastickstackdemo_elasticsearch3_1   /bin/bash bin/es-docker          Up      9200/tcp, 9300/tcp
elastickstackdemo_kibana_1           /bin/sh -c /usr/local/bin/ ...   Up      0.0.0.0:5601->5601/tcp

What do we have here?

The setup at the time of writing consists of the following pieces:

- Kibana node (for data visualisation)

- Three ~~little pigs~~ ElasticSearch nodes.

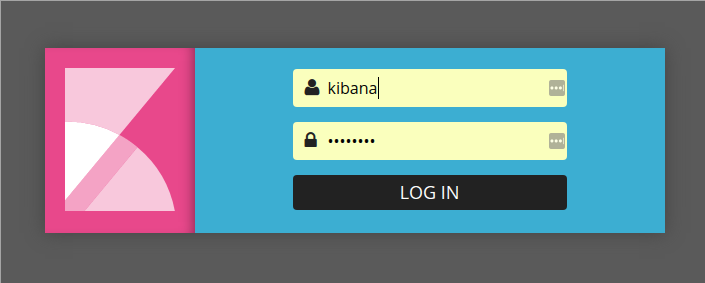

You can now point your browser to http://localhost:5601 to see Kibana's login screen:

This version of the Kibana container uses X-Pack, so login is required. The default login:password pairs are elastic:changeme for the admin user and kibana:changeme for a "normal" user. Of course, I didn't change them for you, so once again: don't run this in production.

The other thing that should now be working is the set of Elasticsearch nodes. You can reach one of them and see the list of nodes connected to the cluster by requesting http://localhost:9200/_cat/nodes, and check the cluster's health at http://localhost:9200/_cluster/health?pretty:

$ curl 'localhost:9200/_cluster/health?pretty' -u elastic:changeme
{
  "cluster_name" : "docker-cluster",
  "status" : "green",
  "timed_out" : false,
  "number_of_nodes" : 3,
  "number_of_data_nodes" : 3,
  "active_primary_shards" : 4,
  "active_shards" : 8,
  "relocating_shards" : 0,
  "initializing_shards" : 0,
  "unassigned_shards" : 0,
  "delayed_unassigned_shards" : 0,
  "number_of_pending_tasks" : 0,
  "number_of_in_flight_fetch" : 0,
  "task_max_waiting_in_queue_millis" : 0,
  "active_shards_percent_as_number" : 100.0
}
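If you would rather script this check than read the JSON by eye, something like the following might do. Treat it as a rough sketch: the function names are made up for this example, the sed-based parsing is deliberately naive (a real script would more likely use a proper JSON tool), and the credentials are simply the demo defaults mentioned earlier.

```shell
#!/bin/sh
# health_field NAME: read cluster-health JSON on stdin and print the value of
# the top-level field NAME (string, number or boolean). This is naive text
# matching, not a real JSON parser. Hypothetical helper for this sketch.
health_field() {
    sed -n 's/.*"'"$1"'" *: *"\{0,1\}\([^",}]*\).*/\1/p' | head -n 1
}

# wait_for_green URL [TRIES]: poll the health endpoint until the cluster
# reports status "green", giving up after TRIES attempts (default 30).
wait_for_green() {
    url=$1
    tries=${2:-30}
    while [ "$tries" -gt 0 ]; do
        status=$(curl -s -u elastic:changeme "$url" | health_field status)
        [ "$status" = "green" ] && return 0
        tries=$((tries - 1))
        sleep 2
    done
    return 1
}

# Usage (needs the cluster from this article to be running):
#   wait_for_green 'localhost:9200/_cluster/health' && echo "cluster is green"
```

The same helper can pull out number_of_nodes, which is a quick way to confirm that all three nodes have joined.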

What more can you do? You can have fun following the Getting Started (well, you don't need to install anything now, so you can skip that part :)).

All the fun and games in docker-compose

Now, let's move on to what is happening under the covers, in docker-compose.yml. Let's leave the best for the end, shall we? Starting with the backbone:

Network definition

networks:
  es_net:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 10.7.7.0/16
          gateway: 10.7.7.1

I have configured a bridged network named es_net to contain all the nodes of the cluster. It has been given a subnet of 10.7.7.0/16, and dynamic address allocation is enabled.
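One detail worth noticing: with a /16 mask only the first two octets identify the network, so 10.7.7.0/16 describes the same address range as 10.7.0.0/16. The arithmetic can be sketched in plain shell. The function name network_of is an assumption for this example, and the snippet says nothing about how Docker itself treats the non-canonical form.

```shell
#!/bin/sh
# network_of A.B.C.D/PREFIX: print the canonical network address for an
# IPv4 CIDR by clearing the host bits. Hypothetical helper for this sketch.
network_of() {
    addr=${1%/*}
    prefix=${1#*/}

    # Split the dotted quad into four octets using parameter expansion.
    o1=${addr%%.*}; rest=${addr#*.}
    o2=${rest%%.*}; rest=${rest#*.}
    o3=${rest%%.*}; o4=${rest#*.}

    ip=$(( (o1 << 24) | (o2 << 16) | (o3 << 8) | o4 ))
    # 4294967295 is 0xFFFFFFFF; shifting it left clears the host bits.
    mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
    net=$(( ip & mask ))

    echo "$(( (net >> 24) & 255 )).$(( (net >> 16) & 255 )).$(( (net >> 8) & 255 )).$(( net & 255 ))/$prefix"
}

network_of 10.7.7.0/16
```

For the subnet above this prints 10.7.0.0/16, and the gateway 10.7.7.1 sits comfortably inside that range.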

Data volumes

volumes:
  esdata1:
    driver: local
  esdata2:
    driver: local
  esdata3:
    driver: local

Each of the nodes will have a separate data volume using the local driver. This is the default driver in Docker, but I prefer to specify it explicitly rather than rely on the implicit default.

Kibana node

kibana:
  image: docker.elastic.co/kibana/kibana:5.3.0
  networks:
    es_net:
  ports:
    - "5601:5601"

The container with kibana is set explicitly to version 5.3.0 and connected to the network es_net. Also, since we will want to use it to discover the data, we provide port mapping for port 5601, which is the port used for the Web UI.

ElasticSearch nodes

elasticsearch1:
  image: docker.elastic.co/elasticsearch/elasticsearch:5.3.0
  networks:
    es_net:
      aliases:
        - elasticsearch
  mem_limit: 1g
  environment:
    - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
  ports:
    - "9200:9200"
  volumes:
    - esdata1:/usr/share/elasticsearch/data

elasticsearch2: # and elasticsearch3
  # Showing the differences only
  environment:
    - "discovery.zen.ping.unicast.hosts=elasticsearch1"
  networks:
    es_net:

First and foremost: I have limited the RAM usage of the containers with ES nodes to 1 GB and decreased the heap size to 512 MB. ElasticSearch is a Java application and loves to eat up a lot of memory. I locked up my machine twice trying to run it with the default settings of a 2 GB heap and no RAM limit. Running three of those containers together with a web browser and the Slack client certainly took its toll on my 8 GB of RAM :-). This way it's safe and still works. Yay!
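The back-of-the-envelope arithmetic behind those numbers goes roughly like this. The figures are the ones mentioned above (three nodes, 2 GB default heap, 1 GB container limit, 512 MB heap, 8 GB of host RAM); the helper name total_mb is invented for this sketch.

```shell
#!/bin/sh
# total_mb NODES PER_NODE_MB: memory claimed by NODES identical containers.
# Hypothetical helper for this sketch.
total_mb() {
    echo $(( $1 * $2 ))
}

nodes=3
host_mb=8192   # 8 GB of RAM on the host

# Default settings: a 2 GB heap per node and no container limit.
echo "default heaps:  $(total_mb "$nodes" 2048) MB of $host_mb MB"

# This setup: each container capped at 1 GB, with a 512 MB heap inside.
echo "capped at 1 GB: $(total_mb "$nodes" 1024) MB of $host_mb MB"
echo "heap in total:  $(total_mb "$nodes" 512) MB"
```

With the defaults, the heaps alone can claim 6144 MB of an 8192 MB machine before the browser even starts, and the JVM needs memory beyond its heap on top of that. With the caps in place, the three containers together cannot exceed 3072 MB.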

Of course, the nodes are connected to es_net and have their specific volumes mapped. Node elasticsearch1 has an additional alias of elasticsearch, which is used by kibana. The other nodes have the network address of the first node specified through the environment variable discovery.zen.ping.unicast.hosts. This is for all the nodes to try and connect to the same cluster.

Getting the data to ElasticSearch

In the next articles about elasticsearch, I will try to show some ways of getting your data from applications to ElasticSearch and using Kibana to get some meaningful analysis from it.

This project was created as a demo for my friends at Students' Campus Computer Network and will be accompanied by some slides once it's finished.

Until the next time!